Originally posted on Mr. Dr. Science Teacher:

Updated 13 July 2020 – Mid COVID-19. In the Spring of 2020 teachers and students around…

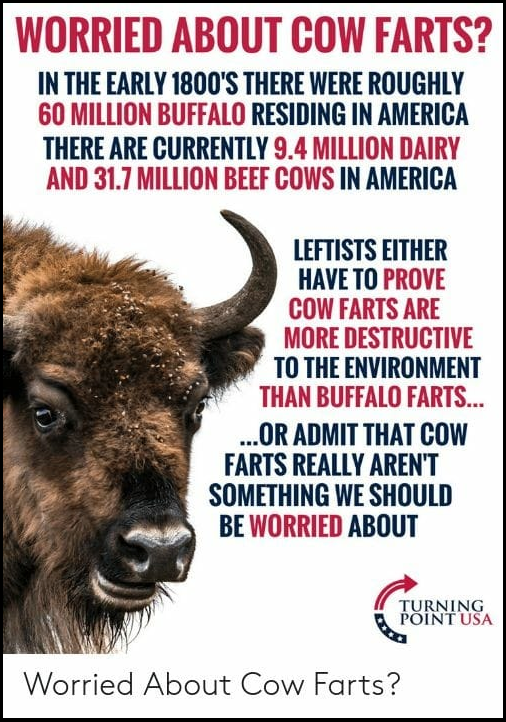

Bison vs Cow Greenhouse Gas “Emissions”

This misleading bison-cattle comparison is making the rounds again. First, it’s important to note that methane (CH4), the gas in…

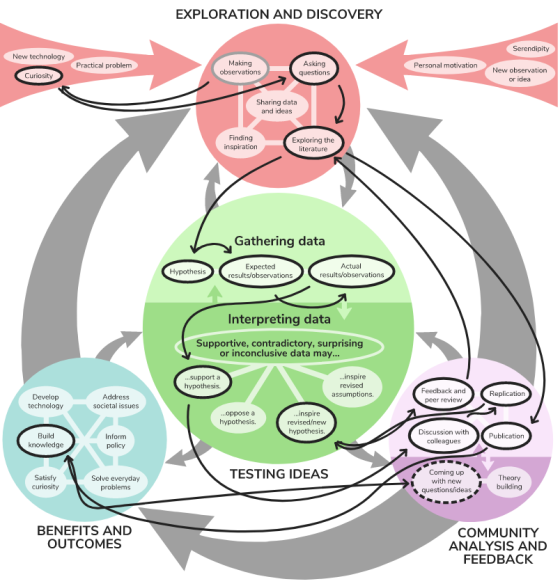

Oversimplifying the Nature of Science: Thoughts for the Beginning of the School Year

There are two components of the Nature of Science that I hit hard at the beginning of each year at all…

My Classes are Pointless

Updated 13 July 2020 – Mid COVID-19. In the Spring of 2020 teachers and students around the world were thrust…

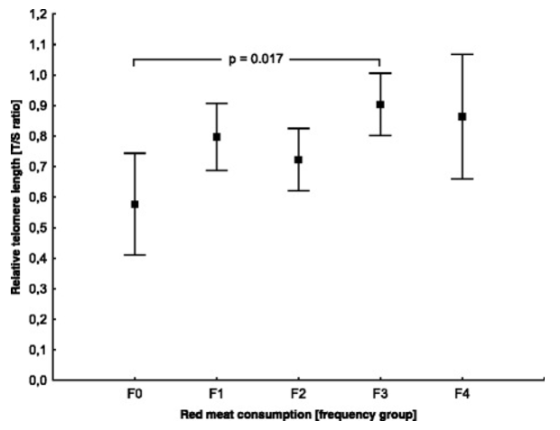

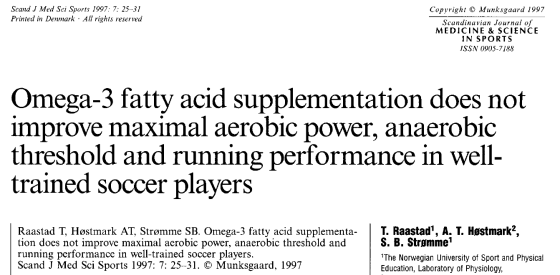

Detecting Pseudoscience (bad science) in Published Papers: Case Study #2

In a previous post titled Glyphosate Pseudoscience, I investigated a journal article in which the authors claimed that indirect consumption of…

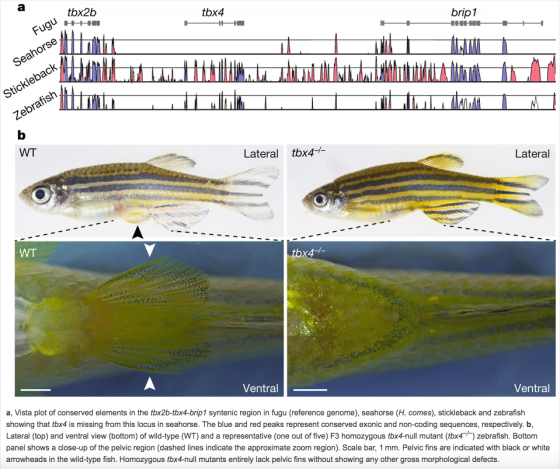

Seahorse Evolution and The Nature of Science

A recent article in the journal Nature on the evolution of novel phenotypes in seahorses presents a great example of the Nature…

No, you don’t have a D in my class.

As I continue my journey trying to deemphasize grades in my high school Biology classes (read more about it here and…

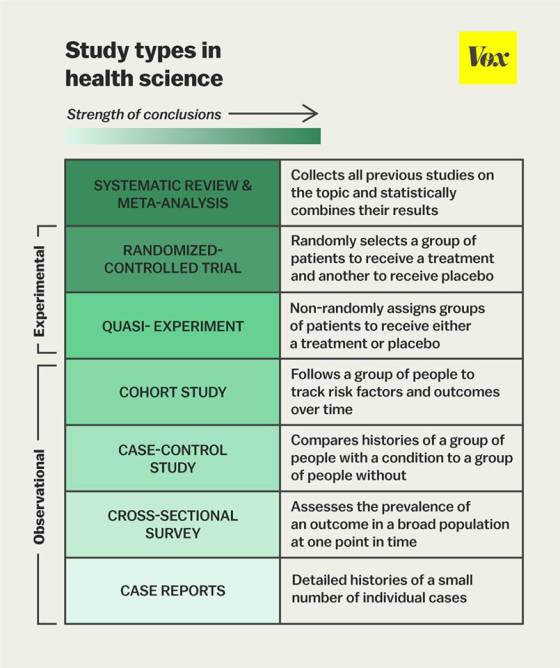

Acupuncture Study as a Cure for Pseudoscientific Thinking

This is a new version of an earlier post from January 2015. First, A Basic Lesson in Pseudoscientific Thinking: As…

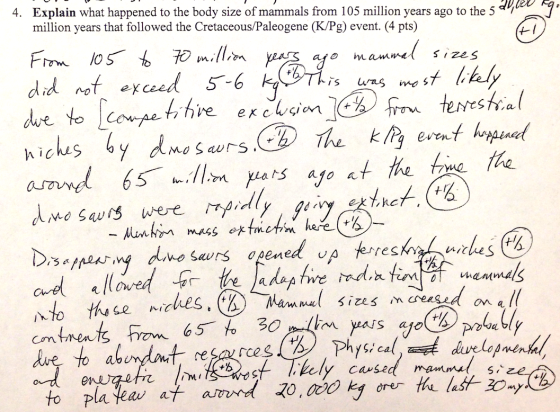

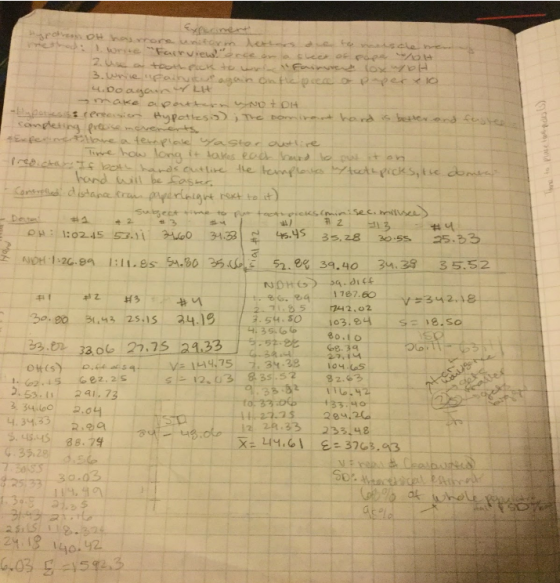

Generalizing vs Explanatory Hypotheses: How do we use them in Practice?

The following post is an extension of a much earlier post of mine called Teaching the Hypothesis. If you haven’t…

“Science is not belief, but the will to find out.” – Anon

Soon after I first met my Father-in-Law, Ted, I learned that one of his greatest pleasures in life was to…